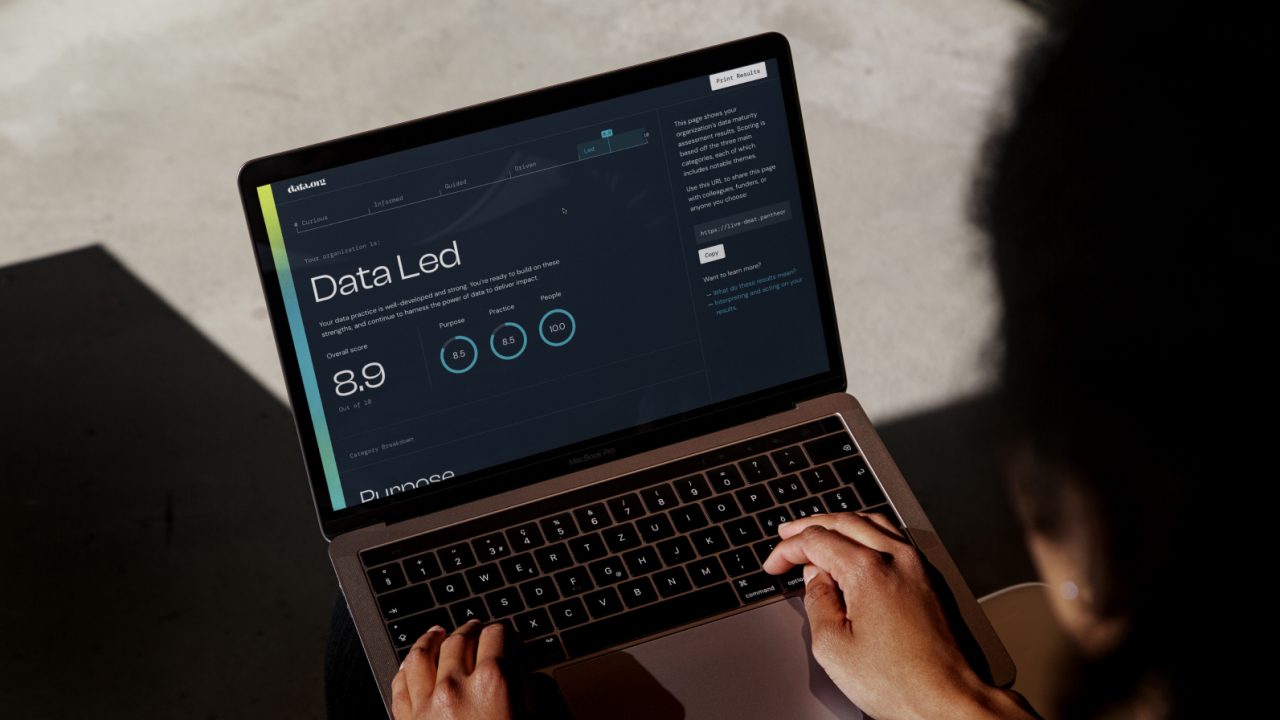

data.org launched the Data Maturity Assessment (DMA) in response to a question we’re regularly asked by social impact organizations: how do we know we are using data effectively? And, if we know we have areas to improve (and constrained resources), where do we begin?

Curious how your organization stacks up?

The Data Maturity Assessment serves as a pulse check, building on prior research and close engagement with social impact organizations (SIOs) and social sector funders. By providing clear results and links to relevant resources, the Assessment gives SIOs a sense of where they stand today and concrete steps to take to advance. Individual assessment data is not shared with any third parties. Aggregate data will be used to inform insights on data maturity in the social sector, with the aim of building understanding of how best to support organizations on their data journey.

In the spirit of releasing what we learn early and often, we wanted to share a few initial observations at this 100-day mark. We received over 300 responses; after removing test and incomplete responses, we used 270 for this first look.

- We received responses from over 10 countries across six continents.

- Responses came from a range of organizations: multi-laterals, governments at federal, regional, and local levels, social impact organizations in a wide range of sizes, and educational institutions from universities to K-12 public and charter schools.

- 60% of responses came from smaller organizations (fewer than 100 people).

We asked all respondents to clarify which field their organization primarily worked in, offering 12 choices plus “other.” The top four identified fields were Education, Health, Social Services, and Economic Development.

- Overall scores for data maturity in these fields were not remarkably different, with Health organizations garnering a slightly higher overall score of 5.5 and Social Services organizations reporting a lower score of 5.1.

- A look into the subcategory scoring shows Health reporting the highest scores in Infrastructure (6.8), Talent (5.9) and Security (5.9), while organizations focused on Education scored highest in Leadership (5.9), Ethics (5.8), and Culture (5.1).

- Some respondents filled in the field they worked in but were reluctant to share their organization’s name, entering “prefer not to disclose” instead.

Almost 30% of responses came from individuals working in an executive role, second only to those in a data role (35%). Unsurprisingly, the larger an organization is, the more likely the respondent is to come from a designated data role. In a small organization, data responsibilities likely fall to the executive.

- Executive, programmatic, data, and technology staff all agree – most of their organizations take a manual or only somewhat automated approach to analyzing data.

- We also asked whether senior leadership in social impact organizations are making decisions with data. Here we begin to see some divergence: executive respondents more often reported that data is used less frequently to make decisions. In contrast, respondents in data roles were more optimistic that senior leadership used data in decision-making.

We also asked about organizations’ ethical data practices. Regardless of role, the majority of respondents said that their organizations collected only data they intended to use, and that they tell people what data they collect, and why.

- We observed a disparity in responses on whether organizations regularly monitor, update, and improve their data ethics policies and practices. Respondents in data roles were markedly more optimistic that this always happened.

Questions about how organizations use data to inform overall strategy included one about how data specifically informs financial and contracting decisions. Here we begin to see a discrepancy between executive and programmatic personnel:

- A majority of respondents in executive roles reported that data always or often informs these decisions.

- Fewer respondents in programmatic roles agreed, with a substantial minority sharing that data never or rarely informed financial and contracting decisions.

These are initial observations only, but they suggest a few topline reflections:

- The breadth of organizations using the tool is remarkable. Our vision was to reach social impact organizations of all sizes, and we are seeing a broader global social impact community engaging with the tool.

- The field that organizations primarily work in matters, as does the individual role someone holds. There is more to learn in both regards.

Even these early observations prompt us to consider more areas for exploration into data maturity and its drivers. We are working with several social sector funders to discuss which ancillary, specific questions would add the most value, and to create a plan for the kind of services that would help organizations mature along their data journey, with approaches that serve to build the field overall.

About the Author

Perry Hewitt is the Chief Marketing and Product Officer of data.org where she oversees the marketing and communications functions, as well as digital product development.
