Overview

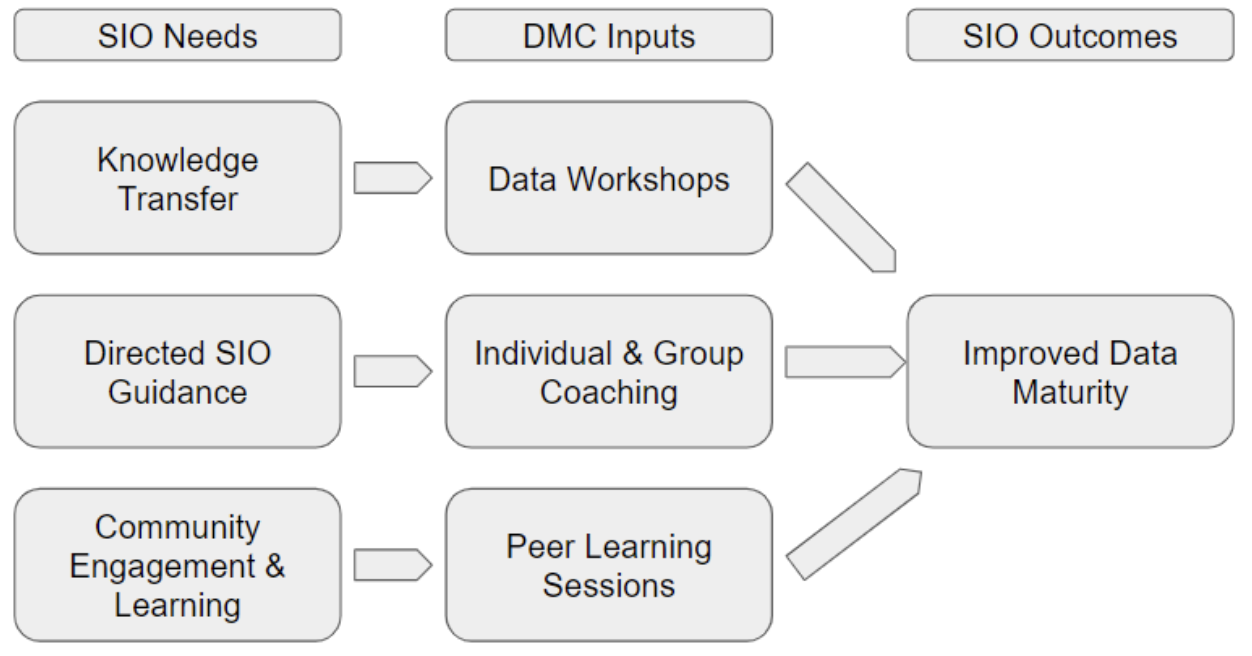

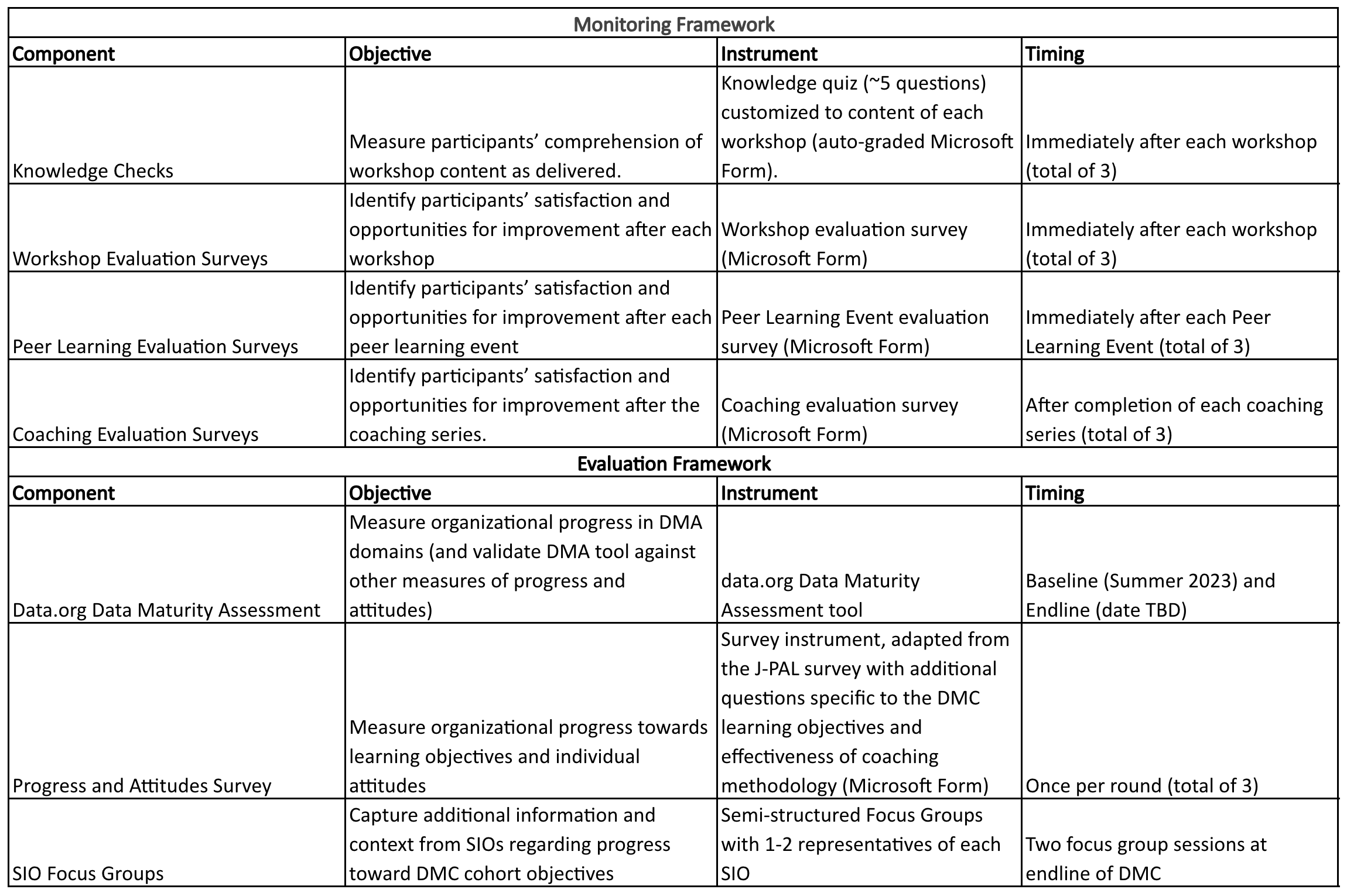

We recommend that Data Maturity Cohort (DMC) program development be underpinned by a robust Monitoring, Evaluation, and Learning (MEL) strategy. In our case, we drew on the data.org MEL framework and added program-specific research questions, so that program initiatives were continually optimized and aligned with overarching goals. The key research questions focused on two areas. The first was which learning modalities best foster organizational growth, that is, which methods are most effective for knowledge dissemination and skill development. The second was how the DMC program contributes to individual and organizational progress, including its impact on data capabilities and overall outcomes. This MEL framework gave the team the insights needed to adapt and refine programs in response to real-time data.

Progress and Attitudes Survey

In addition to the evaluations for workshops, coaching sessions, and peer learning events, we recommend conducting a Progress and Attitudes Survey to identify areas of progress and assess which elements of the DMC are most valuable for participating organizations and individuals.

Key learning questions may include:

- Which elements of the Data Maturity Cohort were most useful for individuals and organizations?

- Were there any tangible advances in organizational data maturity attributable to participation in the Data Maturity Cohort?

A Progress and Attitudes Survey evaluates individual attitudes and organizational progress during the Data Maturity Cohort. It could be administered twice during the cohort: at the midpoint and at the end. The example survey consists of two sections: Individual Attitudes and Learning, and Organizational Progress. In the first section, participants rate various aspects of their learning experience on a scale from 1 to 5, assess how well the experience aligned with their expectations, and indicate the impact on their skills and their willingness to apply data security knowledge in future careers. The second section focuses on organizational benefits: participants rate parameters on a scale from 1 to 10, evaluate any increase in data capacity, and consider the creation of full-time positions to sustain the cohort’s initiatives. Participants are also encouraged to provide additional comments to improve future rounds.

Focus Group Discussions

Focus Group Discussions can help gather comprehensive qualitative feedback from participants of the Data Maturity Cohort (DMC). These discussions are useful for mining insights that will inform the design of instructional materials for future cohorts. Unlike progress surveys, focus groups allow for more in-depth and candid responses, providing a nuanced understanding of participant experiences and the effectiveness of different program components. The primary objectives are to identify which engagement methods—workshops, coaching sessions, or peer learning events—were most and least beneficial for personal development and organizational impact, and to understand the tangible changes resulting from the DMC.

We recommend that focus group sessions begin with an introduction that explains the purpose of the discussion and assures participants that their honest feedback is valued. The sessions can then cover several key areas, each with targeted questions designed to elicit detailed responses. For example, participants can be asked to reflect on the usefulness of the various engagement methods, the effectiveness of workshop activities, and their preferences between group and individual coaching. The discussions can also explore the tangible outcomes of the DMC, such as new documents or policies created and specific organizational problems addressed.

Example discussion questions include:

Engagement Methods:

- Which DMC activities (workshops, coaching sessions, peer learning events) did you find most useful for personal development and for your organization as a whole?

- Which activities did you find least useful, and why?

Tangible Changes:

- Did the DMC result in any tangible changes for your organization?

- Did your organization create any new documents or policies as a result of the DMC?

- Did you address or resolve any specific problems at your organization due to the DMC?

Workshop Structure:

- What do you think of the workshop approach, which included presentations followed by structured activities?

- Did you find the activities useful, and is there anything you wish was different about the workshops?

Coaching Sessions:

- How did you feel about the coaching topics, and which was more helpful: group coaching or individual coaching?

- What considerations influence your decision to sign up for or attend a DMC event?

Program Duration and Scheduling:

- The DMC lasted nearly nine months with various short events spaced out. What did you think about this time commitment?

- Did the scheduling of the events align with your expectations and was it convenient?

- Is there any part of the DMC that you wish was longer or shorter?

These detailed discussions aim to provide actionable feedback that can improve the DMC experience for future participants. By addressing these key areas, the focus groups help to refine the program and ensure it meets the needs and expectations of social impact organizations (SIOs), ultimately enhancing their data maturity journey.